Meta MoCha AI: Pioneering Movie-Grade Talking Character Synthesis

In film and animation production, lifelike characters have always been key to captivating audiences. However, traditional character animation demands significant time and labor. With recent advances in artificial intelligence, automatically generating high-quality videos of talking characters has become feasible. Meta MoCha AI, developed by researchers from Meta and the University of Waterloo, is at the forefront of this work and aims to change how cinematic content is created.

Task: Talking Characters

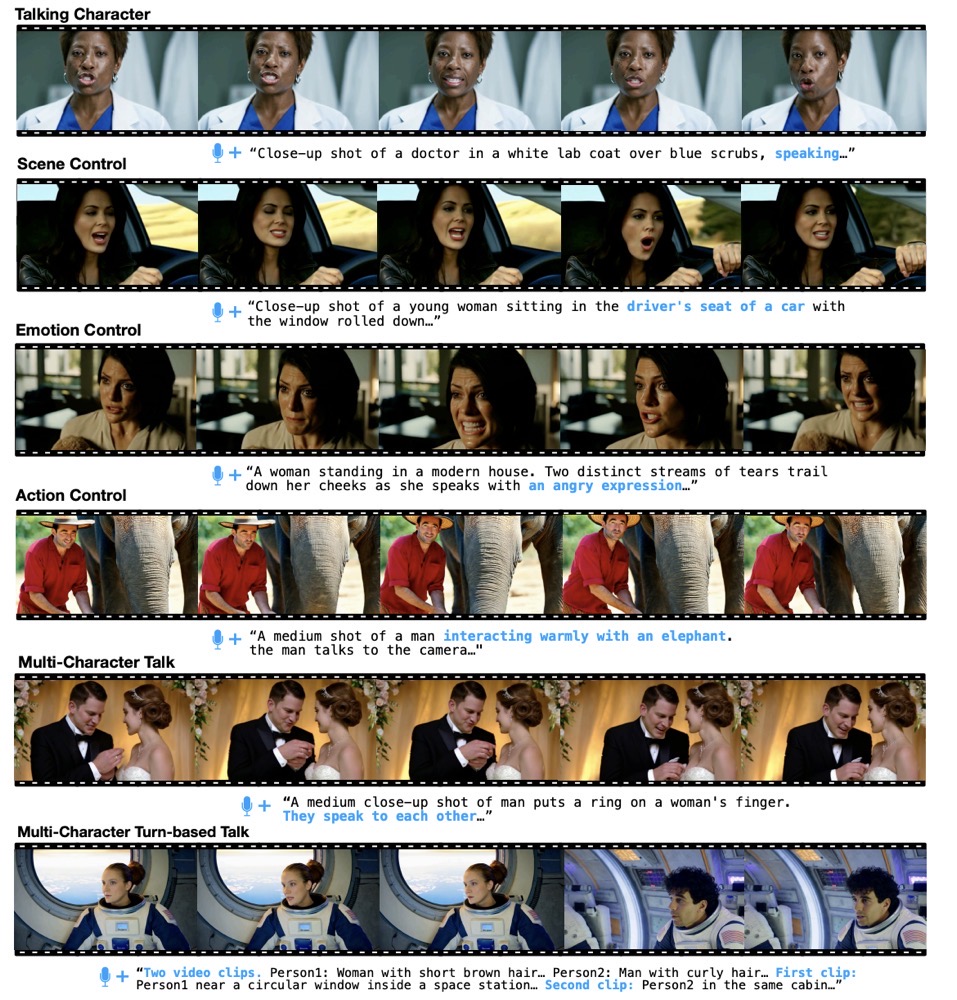

The core task of Meta MoCha AI is Talking Characters. The goal is to generate videos of characters that speak in sync with input audio, display realistic emotions, and perform full-body actions, directly from natural language and speech inputs. The task goes beyond traditional "talking head" approaches, which cover only the face. It generates characters in a range of camera shots (from close-ups to wide shots) and supports multiple characters in a single scene.

Key Features of Talking Characters

- Full-Body Character Generation: Unlike previous methods that focused only on facial expressions and mouth movements, Meta MoCha AI generates full-body animations, making storytelling more expressive and dynamic.

- Speech and Text-Driven Animation: Characters are driven by both speech audio and descriptive text prompts. The text prompt can specify the character's appearance, environment, actions, emotions, and even camera framing, while the speech audio drives the character's lip movements, facial expressions, and body gestures.

- Realistic Lip-Sync and Emotional Expression: MoCha aims to keep the character's lip movements accurately synchronized with the provided speech audio. It also generates natural, coherent facial expressions that match both the speech content and the text prompt.

- Natural Body Movements: The generated characters perform fluid and natural body movements that correspond to the actions described in the text prompt. These movements are synchronized with the speech, creating a seamless and realistic visual experience.

- Multi-Character Interaction: Meta MoCha AI supports multi-character conversations with turn-based dialogues. This allows for dynamic interactions between characters, making it possible to generate complex scenes with multiple characters engaging in context-aware conversations.
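The article does not say how speaking turns are specified. As a rough illustration only, the sketch below assumes a prompting convention in which each character is described once under a tag (such as `Person1`), and each speaking turn becomes its own clip that pairs a text prompt with that turn's speech audio. `Turn`, `build_clip_prompts`, the tag names and the audio file names are all hypothetical; this is not MoCha's actual prompt template or interface.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass
class Turn:
    speaker: str     # hypothetical character tag, e.g. "Person1"
    audio_path: str  # hypothetical path to this turn's speech audio
    action: str      # what the speaker does while talking


def build_clip_prompts(
    characters: Dict[str, str], scene: str, turns: List[Turn]
) -> List[Tuple[str, str]]:
    """Expand a turn-based dialogue into (text prompt, speech audio) pairs, one per clip.

    Every clip repeats the same character descriptions so appearance stays
    consistent across turns (an assumed convention, not MoCha's template).
    """
    intro = " ".join(f"{tag}: {desc}." for tag, desc in characters.items())
    clips = []
    for turn in turns:
        if turn.speaker not in characters:
            raise ValueError(f"unknown speaker tag: {turn.speaker}")
        prompt = f"{intro} Scene: {scene}. {turn.speaker} speaks and {turn.action}."
        clips.append((prompt, turn.audio_path))
    return clips


characters = {"Person1": "a woman in a red coat", "Person2": "an elderly man with a cane"}
turns = [
    Turn("Person1", "turn1.wav", "points at the departure board"),
    Turn("Person2", "turn2.wav", "shakes his head slowly"),
]
for prompt, audio in build_clip_prompts(characters, "a rainy train platform at night", turns):
    print(audio, "->", prompt)
```

Each turn's speech audio drives only the clip in which its tagged speaker talks. Repeating the shared character descriptions in every clip is one simple way to keep the cast consistent from turn to turn.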

Input and Output

Input:

- Text Prompt: Describing the character, environment, actions, facing direction (optional), position in the frame (optional), and camera framing (optional).

- Speech Audio: Driving the character's lip movements, facial expressions, and body movements.

Output:

A video featuring one or more talking characters, which can be humans, 3D cartoon characters, or animals.
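The inputs above can be modeled as a small request object. This is a minimal sketch under assumed names: `TalkingCharacterRequest`, its fields and `text_prompt()` are hypothetical and do not correspond to any published MoCha API. The sketch only shows how the required prompt elements and the optional facing direction, frame position and camera framing might be combined into one text prompt, with the driving speech audio kept alongside it.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class TalkingCharacterRequest:
    """Hypothetical bundle of the text and speech inputs described above."""
    character: str
    environment: str
    action: str
    speech_audio: str               # hypothetical path to the driving speech clip
    facing: Optional[str] = None    # optional, e.g. "facing the camera"
    position: Optional[str] = None  # optional, e.g. "on the left side of the frame"
    framing: Optional[str] = None   # optional, e.g. "Medium close-up"

    def text_prompt(self) -> str:
        """Join the required description with whichever optional fields are set."""
        parts = [
            self.framing,
            f"{self.character} {self.action} in {self.environment}",
            self.facing,
            self.position,
        ]
        return ", ".join(p for p in parts if p) + "."


req = TalkingCharacterRequest(
    character="a chef",
    environment="a busy kitchen",
    action="tastes soup and smiles",
    speech_audio="chef_line.wav",
    framing="Medium close-up",
)
print(req.text_prompt())  # Medium close-up, a chef tastes soup and smiles in a busy kitchen.
```

Leaving an optional field as `None` simply drops it from the prompt. The speech audio is carried separately because it conditions lip, face and body motion rather than the scene description.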

Evaluation Criteria

Characters generated by Meta MoCha AI are evaluated on the following five criteria:

- Lip-Sync Quality: The character's lip movements should be accurately and temporally aligned with the provided speech audio.

- Facial Expression Naturalness: Facial expressions should be natural and coherent, aligning with both the speech content and the text prompt.

- Action Naturalness: Body movements and gestures should be natural and fluid, corresponding to the actions described in the text.

- Text Alignment: The generated actions and expressions should be consistent with the descriptions provided in the prompt.

- Visual Quality: The entire video should be visually consistent and temporally coherent without visual artifacts.
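To make the five criteria concrete, the sketch below averages per-criterion ratings from human annotators for a single method. The criterion keys, the `aggregate` function and the default 1 to 4 rating scale are assumptions for illustration. This is not the MoChaBench scoring code.

```python
from statistics import mean
from typing import Dict, List

# One key per evaluation criterion listed above (hypothetical key names).
CRITERIA = ("lip_sync", "facial_expression", "action", "text_alignment", "visual_quality")


def aggregate(ratings: List[Dict[str, int]], low: int = 1, high: int = 4) -> Dict[str, float]:
    """Average each criterion over all ratings; the [low, high] scale is an assumption."""
    if not ratings:
        raise ValueError("no ratings to aggregate")
    for r in ratings:
        missing = set(CRITERIA) - set(r)
        if missing:
            raise ValueError(f"missing criteria: {sorted(missing)}")
        for c in CRITERIA:
            if not low <= r[c] <= high:
                raise ValueError(f"{c} score {r[c]} outside [{low}, {high}]")
    return {c: float(mean(r[c] for r in ratings)) for c in CRITERIA}


ratings = [
    {"lip_sync": 4, "facial_expression": 4, "action": 3, "text_alignment": 4, "visual_quality": 4},
    {"lip_sync": 3, "facial_expression": 3, "action": 3, "text_alignment": 3, "visual_quality": 3},
]
print(aggregate(ratings))
# {'lip_sync': 3.5, 'facial_expression': 3.5, 'action': 3.0, 'text_alignment': 3.5, 'visual_quality': 3.5}
```

Reporting one average per criterion, instead of a single blended score, keeps a method's strengths visible. For example, strong lip-sync but weak action naturalness shows up as separate numbers.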

Key Technological Innovations

Meta MoCha AI's advances come from combining several techniques that, according to its authors, improve both generation quality and generalization:

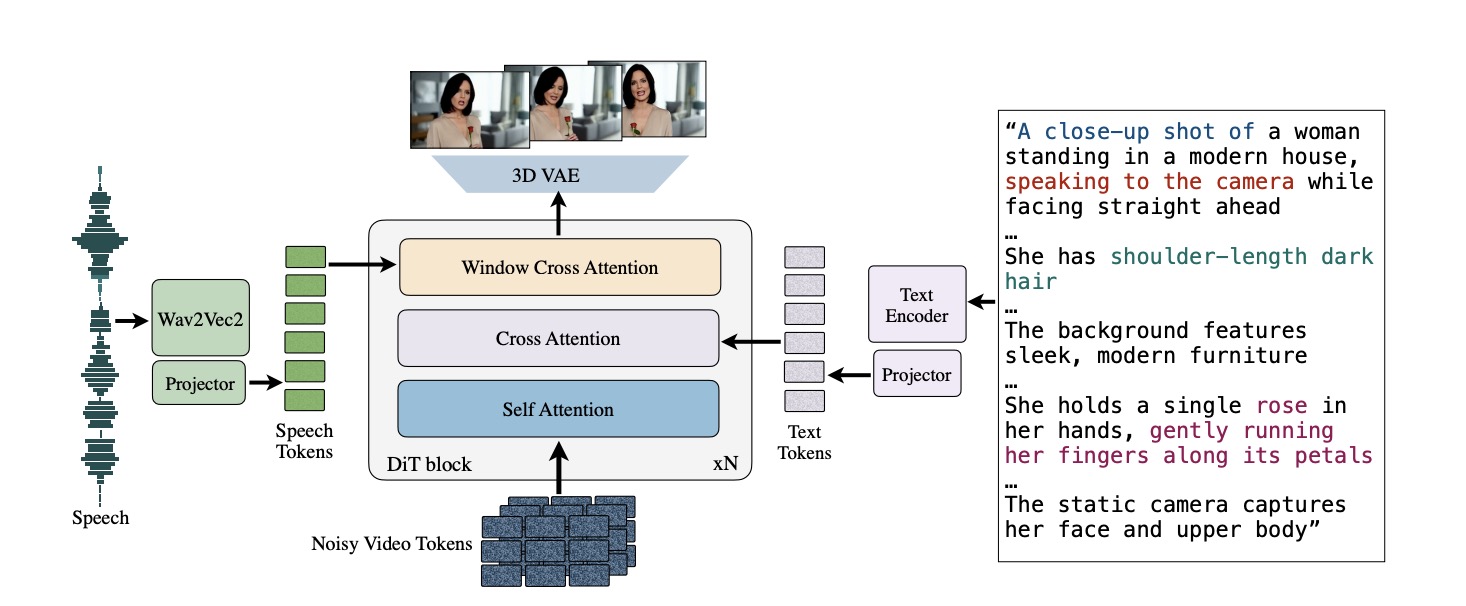

- End-to-End Training: MoCha operates without relying on external control signals (e.g., reference images or skeletal keypoints), directly generating videos from text and speech inputs. This simplifies the model architecture and enhances the diversity and generalization of character movements.

- Speech-Video Window Attention Mechanism: Each video token attends only to speech tokens within a local temporal window around its own position in time. This localized temporal conditioning improves lip-sync accuracy and speech-video alignment, so that characters' mouth movements closely match the input speech.

- Joint Speech-Text Training Strategy: Addressing the scarcity of large-scale speech-labeled video datasets, MoCha leverages both speech-labeled and text-labeled video data for training. This approach enhances the model's ability to generalize across diverse character actions and enables nuanced control of character expressions, interactions, and environments through natural language prompts.

- Multi-Character Conversation Generation: According to its authors, MoCha is the first method to support multi-character conversations with dynamic turn-based dialogue. This overcomes the single-character limitation of previous methods and enables cinematic, story-driven video synthesis.
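The window attention idea can be illustrated with a toy cross-attention in which each video token sees only nearby speech tokens. This is a minimal sketch under stated assumptions, not MoCha's implementation. The uniform mapping from video tokens to audio positions, the symmetric window of `half_window` tokens, and the names `window_mask` and `windowed_cross_attention` are all illustrative choices. A real model would also use batched tensor operations inside a transformer rather than Python loops.

```python
import math
from typing import List

Vector = List[float]


def window_mask(num_video: int, num_audio: int, half_window: int) -> List[List[bool]]:
    """mask[i][j] is True when video token i may attend to audio token j.

    Assumes uniform time alignment: video token i sits at audio position
    i * num_audio // num_video and sees audio tokens within +/- half_window of it.
    """
    mask = []
    for i in range(num_video):
        center = (i * num_audio) // num_video
        mask.append([abs(j - center) <= half_window for j in range(num_audio)])
    return mask


def windowed_cross_attention(
    q: List[Vector], k: List[Vector], v: List[Vector], mask: List[List[bool]]
) -> List[Vector]:
    """Scaled dot-product cross-attention from video queries to audio keys/values,
    where each query only sees the audio tokens its mask row allows."""
    scale = 1.0 / math.sqrt(len(q[0]))
    out = []
    for i, qi in enumerate(q):
        # Scores for in-window audio tokens only; out-of-window tokens get zero weight.
        scores = {
            j: scale * sum(a * b for a, b in zip(qi, kj))
            for j, kj in enumerate(k)
            if mask[i][j]
        }
        top = max(scores.values())  # subtract the max for a numerically stable softmax
        exps = {j: math.exp(s - top) for j, s in scores.items()}
        total = sum(exps.values())
        # Softmax-weighted sum of the in-window value vectors.
        out.append([sum(exps[j] / total * v[j][c] for j in exps) for c in range(len(v[0]))])
    return out


# Toy example: 4 video tokens, 16 audio tokens, window of +/-2 audio tokens.
mask = window_mask(num_video=4, num_audio=16, half_window=2)
print([sum(row) for row in mask])  # [3, 5, 5, 5]

q = [[1.0, 0.5] for _ in range(4)]
k = [[0.0, 0.0] for _ in range(16)]  # zero keys give uniform weights inside each window
v = [[float(j)] for j in range(16)]  # value = audio index
print(windowed_cross_attention(q, k, v, mask))  # each row is the mean audio index in its window
```

Each mask row covers only a few audio tokens. A video token therefore cannot draw on distant speech when shaping the mouth, which is one way to read the intuition behind localized temporal conditioning.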

Setting a New Industry Benchmark

On MoChaBench, a benchmark introduced alongside the model, MoCha outperforms existing baseline methods in lip-sync quality, facial expression naturalness, action naturalness, text alignment, and visual quality. The generated characters convey the emotions and content of the input speech. They also show natural full-body movements and emotional responses that follow the text prompt, producing footage that resembles real movie scenes.

Broad Application Prospects

Meta MoCha AI could bring significant changes to film production, animation, virtual reality, and educational content. Creators could generate high-quality character animations from simple text and speech inputs, reducing the cost and time required for content creation. For virtual assistants, the model could produce more lifelike and natural avatars that improve user engagement. MoCha's capabilities could also be used to create educational content that brings lessons to life through expressive character animation.

Looking Ahead

The success of Meta MoCha AI highlights the potential of artificial intelligence in video generation. As the technology advances, future versions may further improve generation quality and efficiency and support more complex scenes and character interactions. The research also offers new insights and methods for related fields. Beyond being a technical advance, MoCha points toward a creative tool that lets creators produce cinematic-quality character animation at lower cost and higher speed.